AprilTags 3D: Dynamic Fiducial Markers for Robust Pose Estimation in Highly Reflective Environments and Indirect Communication in Swarm Robotics

Although fiducial markers give accurate pose estimates in laboratory conditions, where noise factors are controlled, using them in field robotic applications remains a challenge. The limitation lies in the fiducial marker systems themselves, since they work only within the RGB image space. As a result, noise in the image produces large pose estimation errors. In robotic applications, fiducial markers have mainly been used in their original and simplest form, as a plane printed on a paper sheet. This setup is sufficient for basic visual servoing and augmented reality applications, but not for complex swarm robotic applications in which the setup consists of multiple dynamic markers (tags displayed on LCD screens). This paper describes a novel methodology, called AprilTags3D, that improves the pose estimation accuracy of AprilTags in field robotics with only an RGB sensor by adding a third dimension to the marker detector. It also presents experimental results from applying the proposed methodology to swarm autonomous robotic boats, for latching between them and for creating robotic formations.

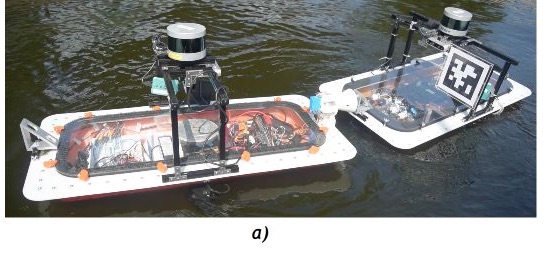

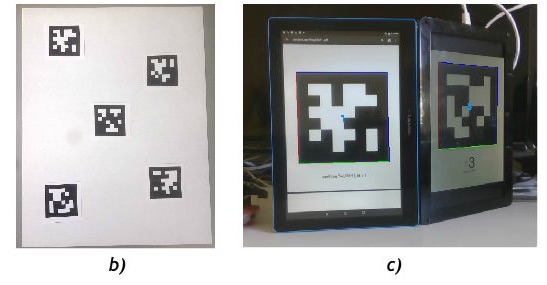

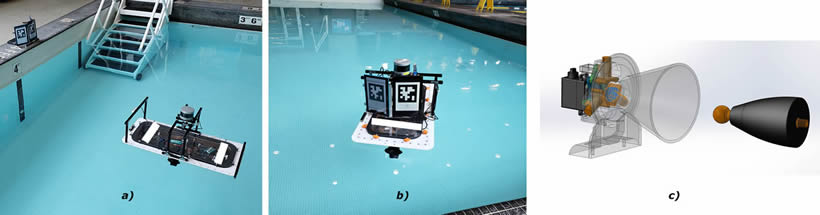

A) Classic AprilTag printed on a paper sheet, used for pose estimation in autonomous latching with robotic boats. B) Tag bundle setup with five markers for more accurate pose estimation (the markers are set in the same plane). C) AprilTags3D setup with a couple of markers rotated relative to each other. The markers are displayed on LCD screens and can change their tag ID to indirectly communicate their state to other robots without losing their position and orientation.

A robotic setup integrating cameras and sensors may be validated in laboratory conditions; however, it may fail when used outdoors in real-world conditions. This is due to noise from the environment that cannot be fully tested in indoor setups, such as sunlight illumination and reflections of light from other objects. The aim of the AprilTags3D framework is to minimize the error in marker detection and pose estimation in real-case scenarios. The framework consists of two or more tags that do not lie on the same plane: they are rotated but still linked to each other, which prevents a single light source from distorting or even cancelling the tag detection and pose estimation. The setup consists of at least a couple of markers, one leader marker Tag_leader and one or more follower marker(s) Tag_follower#.

The markers are linked on one axis as if connected by a hinge and rotated by a certain number of degrees in a hyperbolic fashion. The degree of rotation is set from the distance and angle study to obtain the best detection rates [19] [15]. Further, the degree of rotation can be chosen according to the application and knowledge of the robot's working environment. Hence, the tags no longer form a plane; they become a 3D object. This novel setup enables us to keep the pose estimation even if the environment is extremely noisy. Moreover, we integrate LCD screens to display the tags dynamically, overcoming low-light conditions and enabling indirect communication between robots. In this sense, a robot in a swarm can notify other robots about its state by changing the tag displayed on its LCD without affecting the tag detection or the pose estimation, see Figure

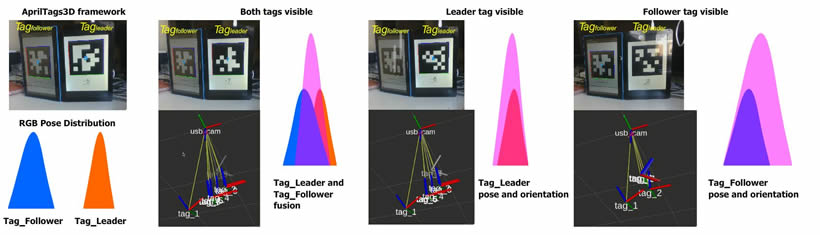

An overview of our proposed method pipeline. Pose estimation from a couple of dynamic markers. The observations are combined according to their uncertainty distribution similar to a Kalman filter for sensor fusion.

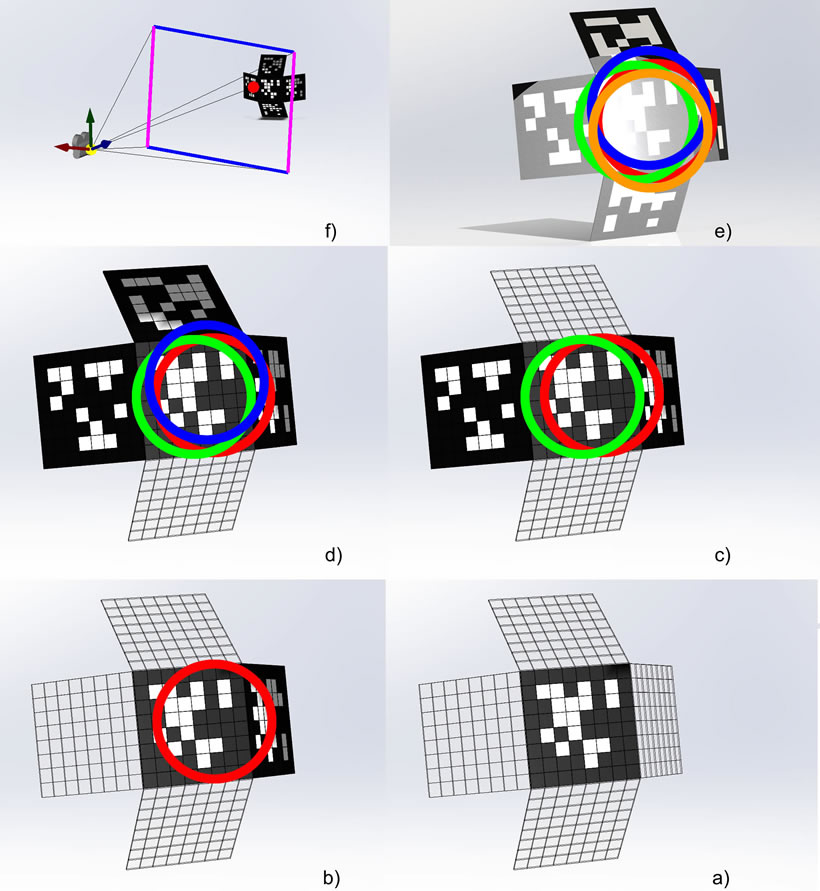

AprilTags 3D. a) Undetectable tag due to direct light reflection. b) Additional tag is detected. c) Tag refines pose. d) Tag improves pose estimation. e) The more measurement signals become available, the more accurate the estimation becomes.
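The uncertainty-weighted combination of leader and follower observations can be illustrated with inverse-variance weighting, which is the static special case of a Kalman update. This is a minimal sketch and not the authors' implementation: the function name `fuse_estimates` and the example variances are assumptions, and yaw is treated as a small angle with no wrap-around handling.

```python
def fuse_estimates(estimates):
    """Fuse scalar estimates given as (value, variance) pairs.

    Each estimate is weighted by the inverse of its variance, so a noisy
    observation (for example, a tag partly washed out by a reflection)
    contributes less to the fused value.
    """
    if not estimates:
        raise ValueError("at least one estimate is required")
    weights = [1.0 / variance for _, variance in estimates]
    total_weight = sum(weights)
    fused_value = sum(w * value for w, (value, _) in zip(weights, estimates)) / total_weight
    fused_variance = 1.0 / total_weight  # never larger than the smallest input variance
    return fused_value, fused_variance


# Hypothetical yaw readings in degrees: a noisy leader tag and a cleaner follower tag.
leader_yaw = (2.0, 4.0)    # (value, variance), assumed numbers
follower_yaw = (0.0, 1.0)  # (value, variance), assumed numbers
fused_yaw, fused_var = fuse_estimates([leader_yaw, follower_yaw])
```

Because the fused variance is the reciprocal of the summed weights, every additional detected tag can only reduce it, which matches the behaviour described in panel e).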

A) Autonomous robotic boat guided to docking with AprilTags3D. B) AprilTags3D with a couple of LCD screens on the robotic boat for dynamic latching and indirect communication. C) Latching system with actuated funnel and pin with bearing stud.

The challenge we want to solve with AprilTags3D is to achieve reliable marker detection and high accuracy in pose estimation in highly reflective environments. In addition, we want to indirectly communicate the robot's state using only the dynamic markers, in a swarm robotics fashion. We performed three different experiments; in the first two we used both the classic printed AprilTag and our AprilTags3D to compare the results: 1) One robotic boat latches to a docking station in an indoor swimming pool with light reflections from the windows and head lamps. 2) Instead of latching to a docking station, we set two robotic boats to latch outdoors on open water. 3) We test the indirect communication for swarm robotics with three autonomous robotic boats performing the train link formation with the dynamic AprilTags3D.
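One way to picture the indirect communication is a fixed mapping between tag IDs and robot states, so that an observer decodes both the identity and the state of a robot from the ID it detects. The sketch below is purely illustrative: the state names, the ID allocation scheme and the function names are hypothetical and are not taken from the paper.

```python
from enum import Enum


class BoatState(Enum):
    # Hypothetical states, chosen only for illustration.
    IDLE = "idle"
    READY_TO_LATCH = "ready_to_latch"
    LATCHED = "latched"


STATES = list(BoatState)
IDS_PER_ROBOT = len(STATES)  # assumed: each robot owns one tag ID per state


def encode_tag_id(robot_index, state):
    """Return the tag ID a robot should display to announce its state."""
    return robot_index * IDS_PER_ROBOT + STATES.index(state)


def decode_tag_id(tag_id):
    """Recover (robot_index, state) from a detected tag ID."""
    robot_index, state_index = divmod(tag_id, IDS_PER_ROBOT)
    return robot_index, STATES[state_index]


# Robot 1 announces that it is ready to latch; an observer decodes the ID.
displayed_id = encode_tag_id(1, BoatState.READY_TO_LATCH)
observed = decode_tag_id(displayed_id)
```

Since every ID owned by a robot is displayed at the same physical location on its screen, changing the ID changes the message but not the geometry used for pose estimation.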

The setup consists of one autonomous robotic boat R1 with an RGB camera and an actuated funnel. R1 is located at a position (R1x, R1y) in the swimming pool. R1z is disregarded, since the controllable space is defined as a 2D plane on the water (Wx, Wy). A docking station with a square tag of side l = 0.13 m and a pin for latching is located at (Tx, Ty, Tz). The robot's camera can detect the marker and calculate its pose while the marker is on the docking station a couple of meters away. If the robot fails to latch due to noise in the pose estimation readings, it executes an autorecovery algorithm to reposition itself and retry the docking action [12]. A successful latching is achieved when dx < 10 mm, |dy| < 40 mm and |yaw| < 27.5°.
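The latching criterion can be written as a simple predicate. This is a sketch under stated assumptions: the function name and parameter names are hypothetical, dx is treated as a non-negative distance, and only the threshold values come from the text.

```python
def is_latch_successful(dx_mm, dy_mm, yaw_deg,
                        max_dx_mm=10.0, max_dy_mm=40.0, max_yaw_deg=27.5):
    """Check the latching tolerances stated in the text.

    dx_mm   -- remaining distance along the approach axis (assumed >= 0)
    dy_mm   -- lateral offset, may be positive or negative
    yaw_deg -- heading error, may be positive or negative
    """
    return (dx_mm < max_dx_mm
            and abs(dy_mm) < max_dy_mm
            and abs(yaw_deg) < max_yaw_deg)


# Hypothetical readings near the end of an approach.
within_tolerance = is_latch_successful(dx_mm=5.0, dy_mm=-12.0, yaw_deg=3.0)
too_far_sideways = is_latch_successful(dx_mm=5.0, dy_mm=55.0, yaw_deg=3.0)
```

The thresholds are keyword arguments so that the same check can be reused with the relaxed dx < 500 mm tolerance of the outdoor experiment.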

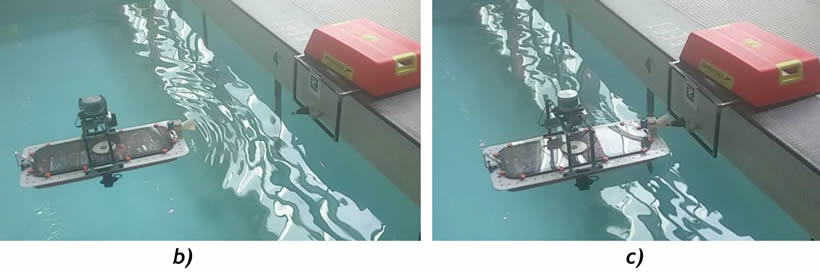

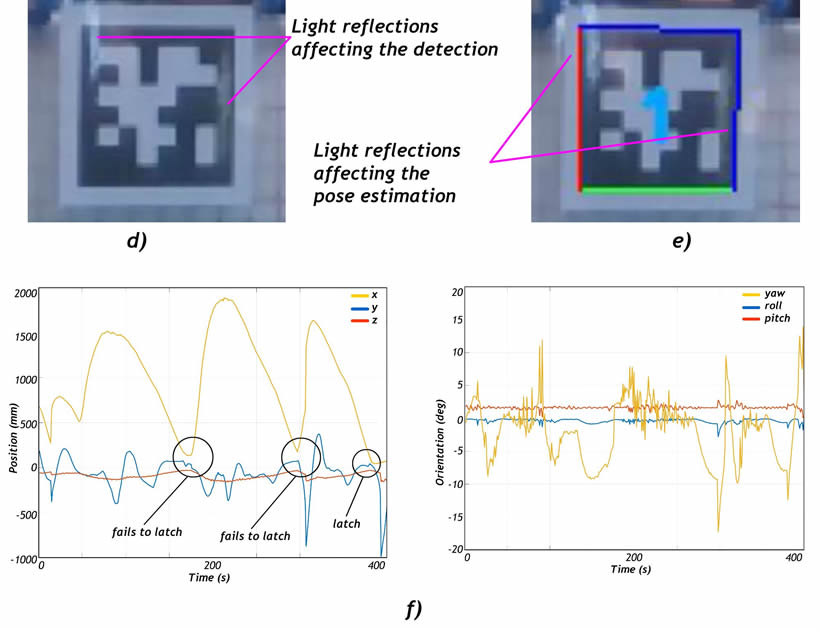

Indoor test with classic printed AprilTag

The robot is initially set 1800 mm away from the docking station in a direct line, meaning that the only variable to minimize for latching is the distance along the X axis: dx = 1800 mm, dy = 0, with roll, pitch and yaw RPY = 0. In the swimming pool we registered noisy pose estimation, with yaw angle readings varying from 0.1° to 10°. This made the robot fail when latching, and it needed a couple of attempts before latching successfully. Figure 8 shows the experimental setup and the sources of error from the light reflections on the marker.

Indoor test with dynamic AprilTags3D

We integrated AprilTags3D with a couple of markers on the docking station. The Tag_leader replaces the printed version at position (Tx, Ty, Tz), while the Tag_follower is connected to the Tag_leader along the Z axis and rotated 10° in yaw.
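Because the follower is rigidly linked to the leader, a detection of the follower alone still determines the leader pose. The sketch below shows this in the 2D water plane (x, y, yaw), matching the planar simplification used for control. It is not the authors' implementation: the helper names and the 0.15 m hinge offset are hypothetical, and only the 10° yaw rotation comes from the text.

```python
import math


def compose(a, b):
    """Compose two planar poses (x, y, yaw in radians): apply b in the frame of a."""
    ax, ay, at = a
    bx, by, bt = b
    return (ax + math.cos(at) * bx - math.sin(at) * by,
            ay + math.sin(at) * bx + math.cos(at) * by,
            at + bt)


def inverse(p):
    """Invert a planar pose."""
    x, y, t = p
    return (-(math.cos(t) * x + math.sin(t) * y),
            -(-math.sin(t) * x + math.cos(t) * y),
            -t)


def leader_from_follower(cam_to_follower, leader_to_follower):
    """Recover the leader pose in the camera frame from a follower detection."""
    return compose(cam_to_follower, inverse(leader_to_follower))


# Fixed geometry of the rig: follower offset from the leader (assumed 0.15 m)
# and rotated 10 degrees in yaw (from the text).
LEADER_TO_FOLLOWER = (0.0, 0.15, math.radians(10.0))

# Simulate a detection of the follower only, with the leader 1.8 m ahead.
true_cam_to_leader = (1.8, 0.0, 0.0)
cam_to_follower = compose(true_cam_to_leader, LEADER_TO_FOLLOWER)
recovered_leader = leader_from_follower(cam_to_follower, LEADER_TO_FOLLOWER)
```

With a full 6-DOF detector the same idea applies using 4×4 homogeneous transforms instead of planar poses.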

The results show improved detection and pose estimation, enabling the robotic boat to find and latch to the pin 99% of the time. Table 1 compares the detection rate and pose estimation error of the classic AprilTags and AprilTags3D.

A) Autonomous robotic boat guided with classic AprilTags to latch to a docking station. The marker is used for detection and pose estimation of the docking station. B) The robot is initially set 1800 mm away from the tag with a direct view. C) The robot ultimately fails to dock due to the reflections on the target. D) The camera on the robot registered light reflections on the marker, which introduced extra white lines and affected the detection. E) The camera on the robot registered light reflections resulting in a noisy pose estimation. F) Position and orientation of the robot when guided for latching.

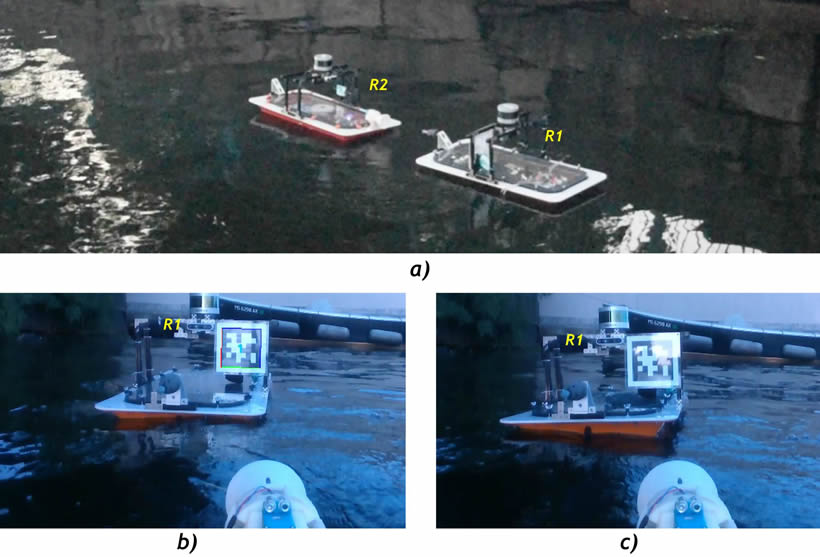

The setup consists of two autonomous robotic boats performing a latching action on the river. The robot carrying the tag, R1, tries to maintain its position at (R1x, R1y) using its lidar sensor, with a position error in the range of ±100 mm, while robot R2 tries to detect the tag, compute the pose estimation and latch to the "balancing" robot R1. Robot R1 carries the tag and the pin, and robot R2 carries the camera with the actuated funnel. Initially, R2 is set 2000 mm away from R1 in a direct line, similar to the previous experiments in the swimming pool. In the same way, if the robot fails to latch at the first try, it retries autonomously until it latches. In these experiments, a successful latch is achieved when |dy| < 40 mm, |yaw| < 27.5° and dx < 500 mm. Since, the tag is mounted inside the robot

Outdoors test with classic printed AprilTag

In order to latch the robots, robot R2 is guided by visual cues to a position (x, y). This coordinate must be reached with an error below ±40 mm for a successful latching to robot R1. In this challenging task the robots are not on stable ground: they float on the river, trying to maintain their positions while overcoming waves, wind and water currents. From the camera's point of view, it is a big challenge to compute the 6-DOF pose of tags located on another boat that moves with the waves to the extent that its pitch and roll angles vary by a few degrees. Further, we rely only on RGB sensors to perform the latching. On the river, the main problem with the classic markers was detection. The marker was detected only 60% of the time due to sunlight reflections, see Figure 9. The reason for this low detection rate was the waves, which made both robots oscillate, changing their position and inclination and creating multiple random reflections on the tag. In addition, light reflections from cars on the avenue and boats nearby created an extremely challenging condition for latching the robots. In this real environment, R2 required on average five attempts before a successful latching.

Outdoors test with dynamic AprilTags3D

We integrated a couple of tags on the robot R1. The Tag_leader replaced the printed tag at position (R1Tx, R1Ty, R1Tz), and the Tag_follower was set similarly to the indoor experiments. The results revealed consistent tag detection, with at least one tag detected 95% of the time. The framework reduces the effect of environmental noise and improves the detection rate, see the table below.

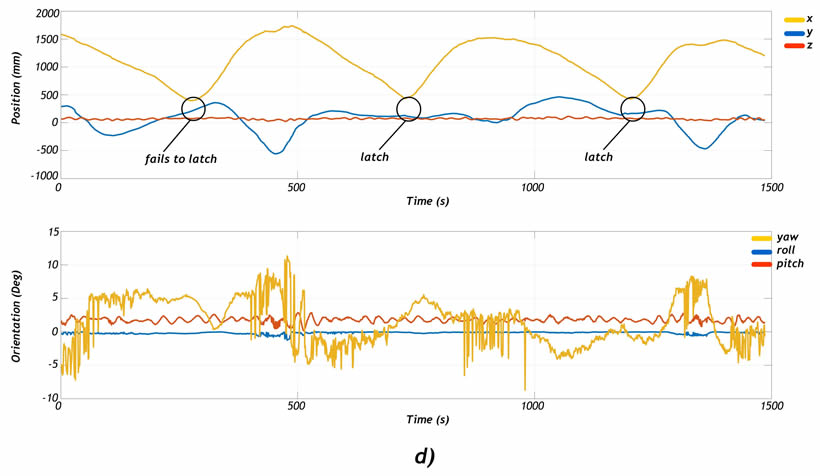

A) Autonomous robotic boat guided with classic printed AprilTags to latch to another floating robot in open water. B) The marker is detected with noisy pose estimation caused by sky and sunlight reflections. C) The marker is not detected because a light reflection covers one quarter of the marker. D) Position and orientation from the experiment. In this sequence, the robot initially fails to latch and then latches a couple of times.

We presented a novel methodology for square fiducial markers, called AprilTags3D, which improves the detection and pose estimation of the classic AprilTags for field robotics in real environments.

| | Tag detection % | Yaw angle error (deg) |
|---|---|---|
| Indoors | | |
| AprilTags | 85% | ±4° |
| AprilTags3D | 99% | ±1° |
| Outdoors | | |
| AprilTags | 60% | ±6° |
| AprilTags3D | 95% | ±1° |

We performed multiple tests indoors and outdoors with robotic boats that autonomously latch to a docking station and to another robot using the camera-target principle. The experimental results with both methodologies revealed that our method improves detection and pose estimation in real-case scenarios, especially in highly reflective environments such as aquatic environments inside the city (rivers and lakes) with sunlight reflections from cars, buildings and other boats. In the experiments we simplified the 3D space to a 2D plane on the water surface for guiding the robotic boats. Nevertheless, the proposed AprilTags3D framework can be integrated into drones, submarines or space robots that need to navigate in 3D space.